Lesson 1 - A very fast paced introduction to the foundations

How to view probability from a measure theoretic perspective.

Optional reading for this lesson

- Oksendal - Stochastic Differential Equations

- Särkkä & Solin - Applied Stochastic Differential Equations, chapter 3 and 4

- Ludwig Winkler - Solving Some SDEs (blogpost)

- Math3ma - Dominated Convergence Theorem

Slides

Video (soon)

In this lecture we give a very fast paced introduction to a perspective on probability some of you might have encountered, some of you might have not: measure theory. We will also dive into stochastic calculus and stochastic differential equations. This is a very dense lecture and we will not be able to cover everything in detail. The goal is to give you a feeling for the concepts and to give you a starting point for further reading.

- Optional reading for this lesson

- Slides

- Video (soon)

- 1. Measure Theory and Probability

- 2. Stochastic Processes and Martingales

- 3. Brownian Motion

- 4. ODEs

- 5. SDEs

- 6. Ito Integral

- 7. Ito’s Lemma

- 8. Solving linear SDEs with Ito’s Lemma

- 9. Ornstein-Uhlenbeck Process

- 10. Solving non-linear SDEs numerically

- Credits

1. Measure Theory and Probability

This first section is mostly taken from Francisco’s MPhil thesis available here.

For many of the technical derivations in the methodology we will study, a basic notion of measure and integration is required, thus we will refresh concepts such as $\sigma$-algebra, probability measures, measurable functions and the Lebesgue–Stieltjes integral, without going into unnecessary technical detail.

1.1 Probability Spaces

Definition: A probability space is defined as a 3-element tuple , where:

- is the sample space, i.e. the set of possible outcomes. For example, for a coin toss .

- The -algebra represents the set of events we may want to consider. Continuing the coin toss example, we may have .

- A probability measure is a function which assigns a number in to any event (set) in the -algebra . The function has the following requirements:

- -additive, which means that if and , then .

- , which we can read as the sample space summing up to (integrating) to 1. Without this condition would be a regular measure.

Note that we want to be able to measure (assign a probability to) each set in rather than , since the latter is not always possible. In other words, one can construct sets that cannot be measured (or can only be measured by the trivial measure , which is not a probability measure). Thus, we require to only contain measurable sets and this is what a -algebra guarantees.

Definition: -algebra is a collection of sets satisfying the property:

- contains : .

- is closed under complements: if , then .

- is closed under countable union: if , then .

Note we use the notation for the Borel -algebra of , which we can think of as the canonical -algebra for – it is the most compact representation of all measurable sets in . This notation can also be extended for subsets of and more generally to any topological space.

1.2 Random Variables

Definition: For a probability space , a real-valued random variable (vector) is a function , requiring that is a measurable function, meaning that the pre-image of lies within the -algebra :

The above formalism of the random variable allows us to assign a numerical representation to outcomes in . The clear advantage is that we can now ask questions such as what is the probability that is contained within a set :

and if we consider the more familiar 1D example, we recover the cumulative distribution function (CDF):

The random-variable formalism provides us with a more clear connection between the probability measure and the familiar CDF. For simplicity in some cases, we may drop the argument from the random variable notation (e.g. ).

1.3 Lebesgue–Stieltjes Integral

Here we offer a pragmatic introduction to the Lebesgue–Stieltjes integral, in the context of a probability space.

For a probability measure space , a measurable function and , the Lebesgue–Stieltjes integral is a Lebesgue integral with respect to the probability measure .

Whilst we have not yet introduced a precise definition for the Lebesgue integral, we will now illustrate some of its properties that give us a grasp of this seemingly new notation. Expectations in our probability space can be written as

Let be the indicator function for set , then

The above result is a useful example, since it shows how a distribution (probability measure) is defined in terms of the integral. This is effectively the definition of a cumulative density function. When our distribution admits a probability density function (PDF) , we have the following: \begin{align} \int_{\Omega} f(\bm{x}) d\mathbb{P}(\bm{x}) = \int_{\Omega} f(\bm{x})p(\bm{x}) d\lambda(\bm{x}), \end{align} where is the Lesbesgue measure, and we can think of it as the characteristic measure for . For many purposes, we can interpret as the regular Riemann integral and in many cases authors \citep{williams2006gaussian} use notationally when the integral is with respect to the Lebesgue measure.

An important takeaway is that, whilst connecting the Lebesgue integral to the standard Riemann integral gives us a useful conceptual connection, it is not always something that can be done. As we will soon see, many random processes do not admit a PDF. In order to be able to compute expectations with respect to these processes, we must adopt the Lebesgue-Stieltjes integral, which is well-defined in these settings, while the standard Riemann integral is not.

2. Stochastic Processes and Martingales

Now let’s talk about stochastic processes, where we thing about dynamic random variables that evolve over time. Let T be a set of times.

Definition: A stochastic process is a collection of random variables defined on a common probability space .

We can think of a stochastic process as a function that maps a time and a sample point to a real number .

Definition: A filtration is a family of sub-sigma algebras of such that for .

We see that the sub-sigma algebras are nested, i.e. . They also get thinner over time, meaning that the information we have about the process increases over time. This is a natural property of stochastic processes, since we can only observe the process over time and therefore only get more information about it over time.

Definition: A stochastic process is adapted to a filtration if is -measurable for all .

This means that the random variable is measurable with respect to the sigma algebra , i.e. we can observe the value of at time by observing the sigma algebra . Adapted processes are also called non-anticipating processes, since we cannot anticipate the value of at time by observing the sigma algebra for . In other words, we cannot see into the future; is only know at time .

An additional key idea contained in this is that the sigma algebra contains all the information we have about the process up to time , i.e. it describes not only the value of at time , but also everything before it.

From these definitions, we can now define the concept of a martingale. Intuitively, a martingale is a stochastic process that has constant expected value over time. Let’s see how we can define this formally.

Definition: A stochastic process is a martingale with respect to a filtration if:

- is integrable for all

- is adapted to for all

- for all .

The last statement is equivalent to since and we can therefore pull into the expectation. This means that the expected value of the change in from time to time is zero. This means that the expected value of at time is equal to the expected value of at time , i.e. the expected value of is constant over time. This is the key property of a martingale.

3. Brownian Motion

Before we finally come to stochastic differential equations, let’s talk about Brownian motion. Brownian motion is a stochastic process that is a martingale and is central to Ito integration theory which we will discuss a lot about later, so it is worth dwelling on it a bit.

Definition: A stochastic process is a Brownian motion if:

- (process starts at )

- is almost surely continuous

- has independent increments ( is independent of )

- (for )

From this definition, we can derive some properties of Brownian motion:

- (since follows a centered normal distribution)

- . This is a key property of Brownian motion and is called the quadratic variation of Brownian motion. It is the reason why we cannot ignore the second term in the Taylor expansion of Ito’s lemma as we will later see. We can show this by noting that since .

- is a martingale. The first two martingale properties are easy to show: is integrable for all since it follows a normal distribution and it is -adapted. For the third martingale property, we make use of a common trick when working with Brownian motion: we try to an increment appear in an expectation and then use that this is 0 by definition of Brownian motion. We can write:

In the following, whenever we talk about Brownian motion we either use or as notation.

4. ODEs

Now that we have a measure theoretic perspective on probability and some feeling for what stochastic processes are, we can start to think about how to define stochastic differential equations.

To do this, let’s first remember what an ordinary differential equation is. An ordinary differential equation is a differential equation that contains one or more functions of one independent variable and the derivatives of those functions. The term ordinary is used in contrast with the term partial differential equation which may be with respect to more than one independent variable. An example ODE is the following:

This is a first order ODE, because it contains the first derivative of . It is also a linear ODE, because it is linear in . It is also an time-invariant ODE, because it does not depend on .

In general, we often write linear differential equations like this:

where is a function of time, is the state of the system at time , is a function of time and is some forcing or driving function; in applications, this coud for example be an input to a system.

A useful special case of this are linear time-invariant differential equations:

where and are constant. We can solve such equations via methods like separation of variables or, for the case of inhomgeneous equations, via the integrating factor method.

For general linear differential equations, we can solve them via methods like Fourier or Laplace transforms or via numerical methods. Linear differential equations are useful to consider since they arise in many equations and can be solved analytically. They will also be useful for us to consider when we think about stochastic differential equations.

5. SDEs

A pragmatic way to think about stochastic differential equations is to think about them as ODEs with some noise added. For example, let’s think about a car in 2 dimensions that is governed by the following ODE resulting from Newton’s second law:

where is the force acting on the car, is the mass of the car, is the acceleration of the car and is the position of the car.

Let’s say that our acceleration is now not precisely known/we have a measurement error in our acceleration. We can model this by adding some noise to our acceleration and descrie it by a random white-noise process :

Now if we define and we can write this as a system of first order ODEs in our old familiar form:

where the first matrix is and the second matrix is . This is a linear time-invariant differential equation where the forcing function is not deterministic but a stochastic process: a stochastic differential equation.

Let’s try to solve such an equation heuristically with initial condition . If we pretend that is deterministic and continuous (which it is not), we can solve this equation like we would solve any other ODE. We can write the solution via the method of integrating factor as:

where is the initial time and is the matrix exponential. Let us continue to pretend that is deterministic and continuous. Then taking expectation on both side yields:

where we used in the first step that since this is one of the properties of the white noise process and in the second step that since this was our initial condition.

We can also get a covariance equation by recalling the Dirac delta correlation proeprty of the white noise process with being the spectral density of the white noise process. Then we can write (for and ):

where in step 3 we used and .

We can now get the the differential equations for the mean and covariance by taking the derivative of the mean and covariance equations with respect to (where we denote and ):

So far, so good: these equations are actually the correct differential equations for the mean and covariance. So can we treat SDEs just like ODEs? No, because we were just lucky in this case that (1) the SDE here was linear and (2) we did not use any of the calculus rules that are different in the stochastic setting. We cannot stretch this heuristic treatment to nonlinear SDEs and we cannot use it for more complex expressions.

To see how quickly this treatment can go wrong, let’s try to get the differential equations for the mean and covariance just by directly differentiating the original equation for mean and covariance and only afterwards taking the expectation, which should not make a difference. For the mean, we indeed get the same result:

When we try the same thing for the covariance, we get the following:

We see that the equation is wrong, it is missing the last term. This is because we cannot treat the stochastic process as a regular function and therefore cannot use the product rule.

6. Ito Integral

This seems easy enough, we can just solve this like we would solve any other ODE, right? Well, not quite. The problem is that the noise process is not a regular function, but a random process. This means that we cannot just integrate it like we would integrate a regular function. We need a new way to integrate random processes. This is where the Ito integral comes in.

To see the problem, let’s consider

7. Ito’s Lemma

So far we defined the stochastic integral in the Ito formulation. Now we want to see how we can solve these integrals in order to make progress with a proper treatment of our SDEs beyond the heuristic treatment we did before. To do this, we will use Ito’s lemma, which is a generalization of the chain rule for stochastic processes. It basically is a Taylor expansiona adapted to the stochastic world and a key tool in stochastic calculus.

Let’s sketch how we get to Ito’s lemma by considering a Taylor series for a function around in ordinary calculus:

Example:

However, for ordinary functions, will be very small since our partitions are very small to begin with. The corresponding change in function value that this term induces will be very small since our function is continuous and differentiable.

Therefore, we can ignore the second term and write:

How does this look like in stochastic calculus? We again consider the change in , but now we consider to be a stochastic process and therefore denote it is . We can write the change in as:

\frac{\partial f(X_t)}{\partial X_t} dX_t + \frac{1}{2} \frac{\partial^2 f(X_t)}{\partial X_t^2} (dX_t)^2$$

where is the change in and is the quadratic variation of . Now in a stochastic process like Brownian motion, the quadratic variation in the limit of infinitely small partitions is not converging to zero, but to the time step (we have described this before in the properties of Brownian motion). Therefore, we cannot ignore the second term in the Taylor expansion and have to keep it. This is the key insight of Ito’s lemma.

We can use Ito’s lemma for all Ito processes.

Definition: An Ito process is an adapted stochastic process that can be expressed as the sum of an integral with respect to time and an integral with respect to a Brownian motion :

Here is the drift term and is the diffusion term. We can think of the drift term as the deterministic part of the process and the diffusion term as the stochastic part of the process.

Now we can formulate what Ito’s lemma is for these Ito processes:

Ito’s lemma: Let be an Ito process and be a function of and that is twice continuously differentiable with respect to and . Then is also an Ito process, can be denoted and we can write:

where we can use as a simpler notation. This is the stochastic version of the Taylor expansion we saw before. We can also write this in integral form:

where the first term is the initial value of , the second term is the deterministic part of the process, the third term is the stochastic part of the process and the fourth term is the correction term that arises from the quadratic variation of the stochastic process.

Another version of Ito’s lemma in its differential form that you will often see is the following:

Here we used the original differential form, substituted with the Ito process, with the quadratic variation of the Ito process and then simplified the resulting expression by grouping and terms.

Ito’s lemma can also be generalized to higher dimensions. Written it matrix from, it looks like this:

where is a -dimensional Ito process and is a function of and that is twice continuously differentiable with respect to and .

8. Solving linear SDEs with Ito’s Lemma

Now that we have a practical formula in our hand, let’s use it to solve some linear SDE equations.

Definition: A geometric Brownian motion (GBM, also known as exponential Brownian motion) is a continuous-time stochastic process that is defined by the following stochastic differential equation:

where is the drift of the process, is the volatility of the process and is a Brownian motion. Both and are constants.

Compared to the general Ito process, we added the on the right hand side as a multiplicative factor to the drift and diffusion term.

Here we see the first problem when solving SDEs: we have on the right-hand side of the equation. Simply integrating won’t work. We need to use Ito’s lemma to solve this. For this, we need choose a good Ansatz for our transformation function so that it will be easy to solve the resulting equation. If we divide both sides by , we can write this as:

In this case, we choose since the on the left hand side hints at the derivative of a logarithm. This transformation is a good choice since it will make the on the right-hand side of the original equation disappear due to the derivative of it being . Let us consider the three kinds of derivatives that we need to compute:

With this, we can write Ito’s lemma for our transformation function as:

Now we can substitute with the original equation. To substitute , we need to use the rules for differentials in Ito calculus. We will talk about these more later, but for now we can use the following rules:

With this, we can write:

where we used the rules above to simplify the expression. Now we can substitute this into our equation for :

We can see that the on the right-hand side disappeared and X_t only appears implicitly on the right hand side via . This is the key insight of Ito’s lemma: we can transform a stochastic differential equation into an easier to solve differential equation by choosing a good transformation function .

Now we can solve this equation by integrating both sides:

where we used that and .

This is the solution to the geometric Brownian motion. We can see that the solution is a log-normal distribution. This is a very important distribution that will appear again in the course, for example when we talk about the Girsanov theorem.

We can look at the interesting special case when our process has no drift, i.e. :

We can see that the integral on the right hand side is an integral martingale and therefore conclude that X_t is a martingale with constant expected value. This is a very important property of the log-normal distribution that we will see again later.

We can look at this in code by simulating a geometric Brownian motion numerically:

# file: "geometric_brownian_motion.py"

import numpy as np

import matplotlib.pyplot as plt

M = 10 # number of simulations

t = 10 # Time

n = 100 # steps we want to see

dt = t/n # time step

#simulating the brownian motion

steps = np.random.normal(0, np.sqrt(dt), size=(M, n)).T

origin = np.zeros((1,M))

bm_paths = np.concatenate([origin, steps]).cumsum(axis=0)

time = np.linspace(0,t,n+1)

tt = np.full(shape=(M, n+1), fill_value=time)

tt = tt.T

plt.plot(tt,bm_paths)

plt.xlabel("Years (t)")

plt.ylabel("Move")

plt.show()

# change time steps to 1,000,000 to observe same quadratic variation along paths

[quadratic_variation(path) for path in bm_paths.T[:4]]

# output:

# change simulations to 100,000 to observe convergence of variance to Time at a particular time step

[variance(path) for path in bm_paths[1:11]]

# output:

9. Ornstein-Uhlenbeck Process

Now let’s look at another example of a linear SDE: the Ornstein-Uhlenbeck process. This is a process that is often used to model the velocity of a particle under the influence of friction. It is defined by the following SDE:

where is the friction coefficient, is the drift term and is the volatility.

Let’s solve this equation in general. We can again use Ito’s lemma to solve this equation. We first see that is intended as the long-term mean of the process X_t. We can therefore choose as our transformation function. We then get the following differential:

where we used that . We can see that the solution is a linear combination of a deterministic part and a stochastic part. The deterministic part is the long-term mean plus a term that decays exponentially to the long-term mean with a time constant of . The stochastic part is a Brownian motion that is weighted by a factor that decays exponentially to zero with a time constant of .

If we look at this equation for a bit, we can see that we are equating the change in to a multiple of itself plus some noise. If we ignore the noise, this pattern of (roughly speaking) is strikingly similar to the classic 1st order ODE with the exponential function as a solution.

We can therefore do another change of variables and define as our new transformation function. We can then write:

To solve this, we can use Ito’s lemma again. We first compute the derivatives:

where we used that .

Now we just have to undo the change of variables and we first get the solution for and then for :

We now focus on the case where . In addition, we choose a parameterization where and for reasons that will become clear later (mostly that our solutions come out nicely). With this, our solution for with intial condition becomes:

where is a Brownian motion with variance .

From this solution we can again get some intuition about the process by poking a bit with it. First, we can see that the process is mean-reverting. This is because the process is pulled back to the mean with a time constant of . It is also the only nontrivial process that is at the same time a Gaussian prcess, a Markov process and temporally homogeneous. To see that the stationary distribution is indeed a Gaussian distribution, we can compute the mean and the variance of the process and then evaluate it in the limit of :

where we used

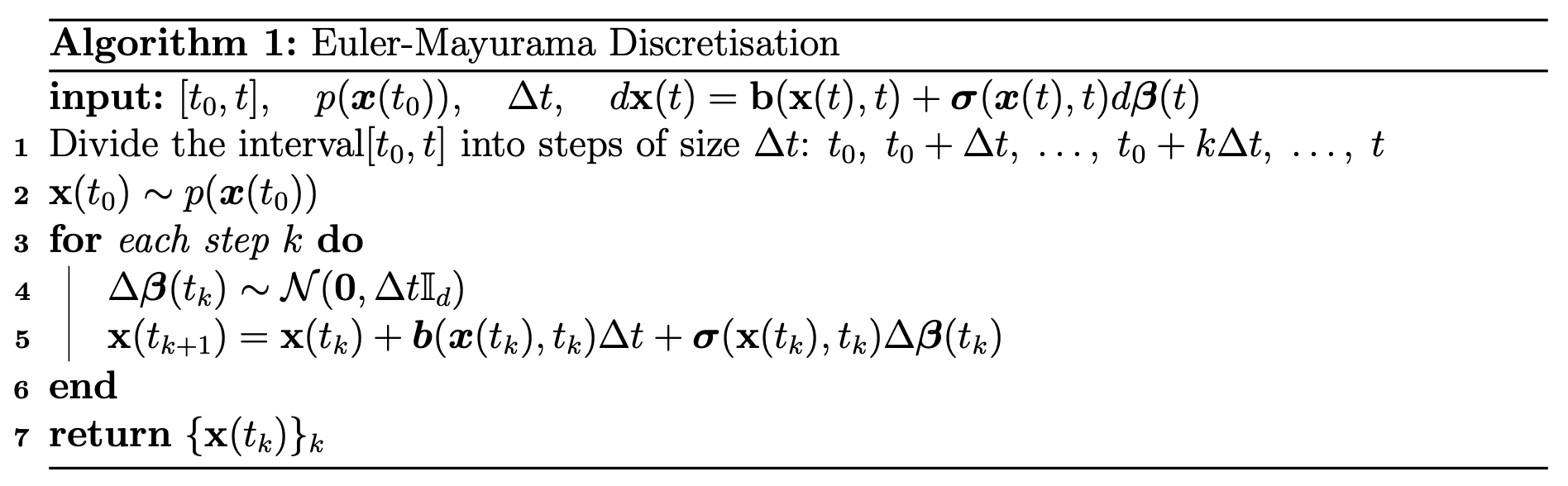

10. Solving non-linear SDEs numerically

So far we only looked at linear SDEs and how to solve them. Can we do something similar for non-linear SDEs? The answer is sadly no, but since we care about computers and have to discretise our eqyaution anyway, we can use the Euler-Maruyama method to solve non-linear SDEs numerically. This is a numerical method that is similar to the Euler method for ODEs, but adapted to the stochastic setting.

You will see in the next lecture that many practical implementations of diffusion models correspond to Euler-Maruyama discretisations of SDEs.

Credits

Code examples adapted from QuantPy. Much of the logic in the lecture is based on the Oksendal as well as the Särkkä & Solin book. Title image from Wikipedia